
Prompt Engineering Guides: Google vs. OpenAI – What You Actually Need to Know

- Author: Ajinkya Kunjir

Large‑language‑model documentation can feel like a fire‑hose. Below is a distilled guide for everyday Gen‑AI users who just want to write better prompts fast—without wading through 60‑page PDFs.

- 1 · Why These Guides Exist

- 2 · Shared Golden Rules

- 3 · Quick Cheat‑Sheet: What You Need to Know

- Quick Decoder

- 4 · Key Differences at a Glance

- 5 · TL;DR for Busy People

1 · Why These Guides Exist

| Guide | Primary Goal |
|---|---|
| Google Prompt Engineering Guide | Teach Gemini / Vertex AI users how to pair model settings (temperature, top‑K, top‑P) with advanced prompting patterns (CoT, ReAct, ToT) for production apps. |
| OpenAI Cookbook › GPT‑4 Prompting | Provide a minimal playbook for getting reliable answers out of GPT‑4, emphasising clarity, structure, and iterative testing. |

2 · Shared Golden Rules

- Say exactly what you want. Specific instructions > vague requests.

- Show a pattern. One‑shot / few‑shot examples boost accuracy.

- Iterate. Tweak wording and model settings; re‑run; compare.

- Structure outputs. Ask for JSON/CSV when you need machine‑readable data.

- Control randomness. Lower temperature ≈ factual & repeatable; higher ≈ creative.
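
The "iterate" rule is easier to follow with a small comparison harness. The sketch below is illustrative only: `compare_variants` is a name invented here, and `fake_model` is a hypothetical stub standing in for a real Gemini or GPT‑4 call.

```python
from collections import Counter
from typing import Callable, Dict


def compare_variants(
    variants: Dict[str, str],
    call_model: Callable[[str], str],
    runs: int = 3,
) -> Dict[str, Counter]:
    """Run each prompt variant several times and tally the distinct answers."""
    results: Dict[str, Counter] = {}
    for name, prompt in variants.items():
        results[name] = Counter(call_model(prompt).strip() for _ in range(runs))
    return results


def fake_model(prompt: str) -> str:
    """Hypothetical stub: swap in a real API call here."""
    return "POSITIVE" if "JSON" in prompt else "It seems positive, mostly."


variants = {
    "vague": "What do you think of this review?",
    "specific": "Classify the review as POSITIVE, NEUTRAL or NEGATIVE. Reply in JSON.",
}
tallies = compare_variants(variants, fake_model)
```

With a real model behind `call_model`, a variant whose tally is spread over many different answers is a candidate for tighter wording or a lower temperature.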

3 · Quick Cheat‑Sheet: What You Need to Know

A) Google Gemini / Vertex AI Users

| Do this | Why |
|---|---|
| Pick the right model first (gemini‑pro for text vs gemini‑pro‑vision for multimodal input). | Different capabilities and cost. |
| Set sensible defaults: temperature 0.2, topP 0.95, topK 30, and an output token cap only as big as you need. | Balances coherence and creativity; saves money. |
| Embed system / role / context prompts in the prompt text. | The Google guide treats the entire prompt string as one chunk, so role and context go inside the text. |
| Use #Context: blocks (or a JSON schema snippet) to anchor the answer. | Reduces hallucinations; enforces format. |
| Chain‑of‑Thought for reasoning: add "Let's think step by step." after the question (temperature 0). | Elicits explicit reasoning, which improves answers for maths and logic. |
| Advanced patterns (ReAct, Step‑back, Tree‑of‑Thought). | When basic prompting stalls, switch to these. |
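
Because role and context live inside a single prompt string here, a small builder keeps the pieces consistent. This is a minimal sketch: the function name `build_gemini_prompt` and the exact layout of the string are assumptions for illustration, not something either guide prescribes.

```python
def build_gemini_prompt(
    role: str,
    context: str,
    question: str,
    chain_of_thought: bool = False,
) -> str:
    """Assemble role, context and question into one prompt string."""
    parts = [
        f"You are {role}.",
        f"#Context:\n{context}",
        f"Question: {question}",
    ]
    if chain_of_thought:
        # The Chain-of-Thought trigger phrase goes after the question.
        parts.append("Let's think step by step.")
    return "\n\n".join(parts)


prompt = build_gemini_prompt(
    role="a careful maths tutor",
    context="The shop sells apples at 3 for $2.",
    question="How much do 12 apples cost?",
    chain_of_thought=True,
)
```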

Gemini Example – JSON extraction

System: Extract movie sentiment as JSON.

Review: "A breathtaking masterpiece with minor pacing issues."

Schema: {"sentiment": "POSITIVE|NEUTRAL|NEGATIVE", "notes": string}

JSON Response: { "sentiment": "POSITIVE", "notes": "Visually stunning but minor pacing issues" }
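
A reply like this is only useful once it has been checked. The sketch below parses the response with Python's standard `json` module and validates it against the schema from the example; `parse_sentiment` and `ALLOWED_SENTIMENTS` are names made up for this illustration.

```python
import json

ALLOWED_SENTIMENTS = {"POSITIVE", "NEUTRAL", "NEGATIVE"}


def parse_sentiment(raw: str) -> dict:
    """Parse a model reply and check it against the schema from the example."""
    data = json.loads(raw)  # raises json.JSONDecodeError on malformed output
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if data.get("sentiment") not in ALLOWED_SENTIMENTS:
        raise ValueError(f"unexpected sentiment: {data.get('sentiment')!r}")
    if not isinstance(data.get("notes"), str):
        raise ValueError("'notes' must be a string")
    return data


reply = '{ "sentiment": "POSITIVE", "notes": "Visually stunning but minor pacing issues" }'
result = parse_sentiment(reply)
```

If validation fails, one reasonable response is to re-run the prompt with the error message appended.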

Quick Decoder

| Setting | What it controls | Recommended default |
|---|---|---|
| Temperature | Governs randomness in token selection. 0 → greedy decoding (always choose the top‑probability token). Higher values flatten the distribution, so 1 and above gives more varied, less predictable output. | 0.2 keeps answers mostly factual yet allows mild variety. |
| topP (nucleus sampling) | Keeps the smallest set of top tokens whose cumulative probability reaches p. topP 1 uses the full vocabulary; lower values remove fringe tokens. | 0.95 drops the low‑probability tail while keeping 95 % of the probability mass. |
| topK | Considers only the K most‑probable tokens before topP and temperature are applied. | 30 = “use the 30 best candidates, ignore the rest”: coherent yet flexible. |

Mental Model

- topK caps the maximum candidate pool.

- topP trims ultra‑low‑probability outliers.

- Temperature spices the final pick: lower for facts, higher for creativity.
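
To make the mental model concrete, here is a toy sketch of the three knobs acting on a four‑token vocabulary. It follows the order described above (topK, then topP, then temperature); other libraries may apply these steps in a different order, and `sampling_distribution` is a name invented for this example rather than part of any SDK.

```python
import math
from typing import Dict


def sampling_distribution(
    logits: Dict[str, float],
    temperature: float,
    top_k: int,
    top_p: float,
) -> Dict[str, float]:
    """Return the probabilities a token would be sampled from."""
    # Raw softmax weights, ranked most likely first.
    peak = max(logits.values())
    exps = {tok: math.exp(v - peak) for tok, v in logits.items()}
    total = sum(exps.values())
    ranked = sorted(exps, key=exps.get, reverse=True)

    # topK: cap the candidate pool.
    ranked = ranked[: max(1, top_k)]

    # topP: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for tok in ranked:
        kept.append(tok)
        cumulative += exps[tok] / total
        if cumulative >= top_p:
            break

    # Temperature 0 means greedy decoding.
    if temperature <= 0:
        return {kept[0]: 1.0}

    # Temperature: sharpen or flatten the survivors, then renormalise.
    top = logits[kept[0]]
    weights = {tok: math.exp((logits[tok] - top) / temperature) for tok in kept}
    norm = sum(weights.values())
    return {tok: w / norm for tok, w in weights.items()}


toy_logits = {"the": 2.0, "a": 1.0, "cat": 0.0, "zebra": -3.0}
factual = sampling_distribution(toy_logits, temperature=0.2, top_k=30, top_p=0.95)
neutral = sampling_distribution(toy_logits, temperature=1.0, top_k=30, top_p=0.95)
```

In both calls "zebra" is trimmed by topP; at temperature 0.2 nearly all of the remaining probability sits on "the", while at 1.0 it is spread more evenly across the three survivors.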

Rule‑of‑thumb presets

- Factual queries: temperature 0–0.3, topP 0.9–0.95, topK 20–40

- Creative writing: temperature 0.7–0.9, topP 0.98, topK 40–100

Use these knobs together to balance coherence against originality. They do not change how many tokens you pay for; cap the output length separately to protect your token budget.
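
One way to keep presets like these in code is a small lookup table. The values below are picked from inside the ranges above, and the key names (`top_p`, `top_k`, `max_output_tokens`) plus the helper `settings_for` are assumptions for illustration, not the parameter names of any particular SDK.

```python
from typing import Dict

# Hypothetical presets, chosen from within the rule-of-thumb ranges.
PRESETS: Dict[str, Dict[str, float]] = {
    "factual": {"temperature": 0.2, "top_p": 0.95, "top_k": 30},
    "creative": {"temperature": 0.8, "top_p": 0.98, "top_k": 60},
}


def settings_for(task: str, max_output_tokens: int = 256) -> Dict[str, float]:
    """Return a copy of the preset for `task`, plus an output token cap."""
    if task not in PRESETS:
        raise KeyError(f"unknown task type: {task!r}")
    settings = dict(PRESETS[task])  # copy so callers cannot mutate the preset
    settings["max_output_tokens"] = max_output_tokens
    return settings


factual_settings = settings_for("factual")
creative_settings = settings_for("creative", max_output_tokens=1024)
```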

B) OpenAI ChatGPT / GPT‑4 Users

| Do this | Why |
|---|---|
| Use the 3‑message pattern: system, user, optional assistant examples. | Mirrors how the chat API structures a conversation. |
| Give the model a role first, e.g. system: "You are a precise financial analyst." | Sets tone and knowledge scope. |
| Be explicit about style / length, e.g. “Answer in two bullet points.” | GPT follows formatting instructions well. |
| Temperature guide: 0–0.3 near‑deterministic · 0.4–0.7 balanced · >0.7 creative. | Quick mental model. |
| JSON mode (response_format={"type":"json_object"}) or function‑calling when you need structured data. | JSON mode returns syntactically valid JSON, so parsing is simpler; still check that the fields you expect are present. |
| Few‑shot > Many‑shot: stay within the context window (~128k tokens for GPT‑4o) but don’t bloat the prompt. | Saves tokens; keeps core examples visible. |
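
As a rough illustration of the JSON‑mode row, the sketch below builds a chat‑completions request body as a plain dictionary without sending it. The helper name `build_json_mode_request` and the default model string are assumptions; the guard reflects the requirement that the prompt itself asks for JSON when JSON mode is on.

```python
import json


def build_json_mode_request(
    system: str,
    user: str,
    model: str = "gpt-4o",
    temperature: float = 0.2,
) -> dict:
    """Build a chat-completions request body with JSON mode switched on."""
    messages = [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]
    # JSON mode expects the prompt itself to ask for JSON.
    if not any("json" in m["content"].lower() for m in messages):
        raise ValueError("mention JSON in the prompt when using JSON mode")
    return {
        "model": model,
        "messages": messages,
        "temperature": temperature,
        "response_format": {"type": "json_object"},
    }


body = build_json_mode_request(
    system="You are a precise financial analyst. Reply in JSON.",
    user="Summarise Q3 revenue drivers in two bullet points.",
)
payload = json.dumps(body)  # ready to send with the HTTP client of your choice
```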

GPT‑4 Example – Few‑shot classification

[
  {"role": "system", "content": "You are a support triage bot. Answer with 'LOW', 'MEDIUM' or 'HIGH'."},
  {"role": "user", "content": "Classify: 'My screen occasionally flickers when waking from sleep.'"},
  {"role": "assistant", "content": "LOW"},
  {"role": "user", "content": "Classify: 'Laptop battery swollen and leaking.'"}
]

Expected answer: HIGH
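
The same few‑shot pattern can be generated from a list of labelled examples. This is a sketch under stated assumptions: `build_triage_messages` and `LABELS` are illustrative names, and the function only constructs the message list without calling any API.

```python
from typing import Dict, List, Tuple

LABELS = {"LOW", "MEDIUM", "HIGH"}
SYSTEM_PROMPT = "You are a support triage bot. Answer with 'LOW', 'MEDIUM' or 'HIGH'."


def build_triage_messages(
    examples: List[Tuple[str, str]],
    ticket: str,
) -> List[Dict[str, str]]:
    """Turn (ticket, label) examples plus a new ticket into a message array."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    for text, label in examples:
        if label not in LABELS:
            raise ValueError(f"unknown label: {label!r}")
        # Each example is a user turn followed by the ideal assistant reply.
        messages.append({"role": "user", "content": f"Classify: '{text}'"})
        messages.append({"role": "assistant", "content": label})
    # The ticket to classify always comes last.
    messages.append({"role": "user", "content": f"Classify: '{ticket}'"})
    return messages


messages = build_triage_messages(
    examples=[("My screen occasionally flickers when waking from sleep.", "LOW")],
    ticket="Laptop battery swollen and leaking.",
)
```

Checking the model's reply against `LABELS` before acting on it catches answers that drift outside the three allowed values.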

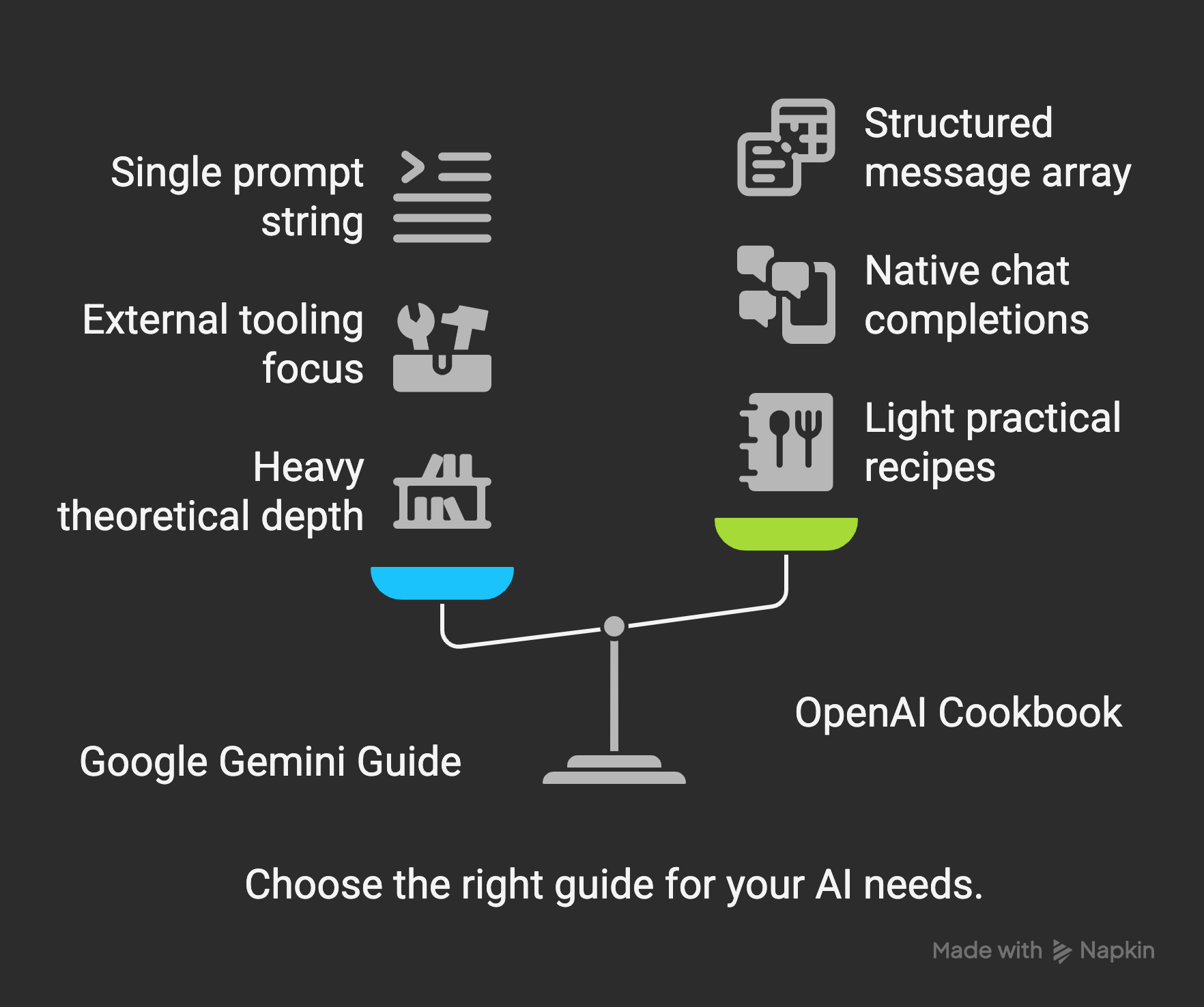

4 · Key Differences at a Glance

| Aspect | Google Gemini Guide | OpenAI Cookbook |
|---|---|---|
| Depth of theory | Heavy: sampling maths, multi‑step reasoning patterns. | Light: theory trimmed; focus on recipes. |
| Tooling focus | LangChain, Vertex AI APIs, external search & function calls. | Native chat completions + JSON / function calling. |
| Prompt scope | One big string (you manage roles inside). | Structured message array (system/user/assistant). |
| Advanced patterns covered | CoT, ToT, Self‑consistency, ReAct, APE. | Mainly CoT + few‑shot + JSON format. |

5 · TL;DR for Busy People

- State the task, the format, and any examples—up‑front.

- Clamp temperature ≤ 0.3 for factual answers; raise it for creative tasks.

- Ask for JSON if a machine will consume the output.

- Iterate: tweak words or model knobs, rerun, compare.

- Use Chain‑of‑Thought ("Let's think step by step") when the first answer feels wrong.

Stick to those five rules and you’ll harvest 80 % of the value from both GPT‑4 and Gemini—no 70‑page whitepapers required.

Further reading: Google Prompt Engineering Guide · OpenAI Cookbook